Camden’s Public Schools and the Making of an Urban “Lost Cause”

Noelle Cascarelli

In modern-day America, there is perhaps no city quite as infamous as Camden, New Jersey. A relatively small urban community situated along the banks of the Delaware River, directly across from the sprawling, densely populated metropolis of Philadelphia, Camden would in any other world likely be a niche community, familiar only to those in the immediate surrounding area. Instead, the story of Camden is perhaps one of the greatest instances of institutional collapse and urban failure in modern America, akin to the catastrophes that befell cities such as Detroit, Michigan, and Newark, New Jersey, throughout the mid-twentieth century.

Once an industrial juggernaut, home to powerful manufacturing corporations such as RCA Victor and the New York Shipbuilding Corporation, Camden was perhaps one of the urban communities most integral to the American war effort and the eventual victory in the Pacific Theatre of World War II. In the decades after the war, however, Camden experienced significant decline, its once-prosperous urban hub giving way to a landscape of disinvestment, depopulation, and despair. By the late twentieth century, specifically the 1980s and 1990s, Camden had devolved into a community wracked by poverty, crime, and drug abuse, bearing the notorious label “Murder City, U.S.A.,” a moniker that recast decades of systemic inequity and institutional discrimination as a fatalistic narrative, presenting Camden as a city beyond saving and destined for failure. Yet Camden’s decline was neither natural nor inevitable; rather, it was engineered through public policy. Through a deliberate process of institutional segregation and racial exclusion, state and city lawmakers took advantage of Camden’s failing economy and evaporating job market to confine communities of color to deteriorating neighborhoods, effectively denying them access to the educational and economic opportunities afforded to white suburbanites in the surrounding area.

This paper focuses chiefly on Camden’s educational decline and inequities, situating them within a broader historical examination of postwar urban America. Drawing on the historiographical frameworks of Arnold Hirsch, Richard Rothstein, Thomas Sugrue, and Howard Gillette, this research seeks to interrogate and illustrate how segregation and suburbanization reinforced racial inequity, and how such disenfranchisement created the perfect storm of educational failure in Camden’s public school system. The work of these scholars demonstrates that Camden’s neighborhoods, communities, and schools were intentionally structured to contain, isolate, and devalue communities and children of color, and that these patterns were not unintended byproducts of natural migration or economic development. Within this context, public education in Camden did not simply mirror urban segregation but institutionalized it: schools became both a reflection and a reproduction of the city’s racial geography, entrenching the divisions drawn by policymakers and real estate developers into a pervasive force present in every facet of life in Camden.

In examining the influence of Camden’s segregation on public education, this study argues that the decline of the city’s school system was not merely a byproduct of institutional urban collapse but an engine of it. The racialized, inequitable geography of public schooling in Camden began as a deliberate product of institutional disenfranchisement and administrative neglect, but it quickly became a self-fulfilling prophecy of failure: crumbling school buildings and curricular inequalities became manifestations of policy-driven failure, and narratives of students of color as “inferior” were internalized by children throughout the city. Media portrayals of the city’s school system and its youth, meanwhile, transformed these failures into moral statements, depicting Camden’s children and their learning communities as symbols of inevitable dysfunction rather than victims of institutional exclusion. Thus, Camden’s transformation into the so-called “Murder Capital of America” was inseparable from the exclusionary condition of the city’s public schools, which not only bore witness to segregation but also became its most visible proof, informing fatalistic narratives about the city and the moral character of its residents.

Historiography

Historians of postwar America have long established racial and socioeconomic segregation as essential to the development of the modern American urban and suburban landscape, a structure manufactured and carefully reinforced throughout the twentieth century by the nation’s political and socioeconomic elite. Foundational studies such as Arnold Hirsch’s Making the Second Ghetto: Race and Housing in Chicago (1983) and Richard Rothstein’s The Color of Law: A Forgotten History of How Our Government Segregated America (2017) reinforce this understanding of postwar urban redevelopment and suburban growth, situating segregated metropolitan space as the direct result of institutional policy rather than a byproduct of happenstance migration patterns.[1] In The Color of Law, Rothstein explores the role of federal and state political institutions in codifying segregation through policies of redlining, mortgage restrictions, and the racially exclusionary extension of mortgage insurance to homeowners. In particular, Rothstein focuses on the Federal Housing Administration’s creation of redlining maps, which designated majority Black and Hispanic neighborhoods as high-risk “red zones,” effectively denying residents of these communities home loans and thus intentionally erecting barriers to intergenerational wealth accumulation through homeownership in suburban communities such as Levittown, Pennsylvania.[2]

Hirsch’s Making the Second Ghetto echoes this narrative of urban segregation as manufactured, primarily through its “second ghetto” thesis. Through a careful case study of Chicago, Hirsch argues that local municipalities, urban developers and planners, and the city’s business elite worked in tandem to enact policies of “domestic containment,” wherein public housing projects were weaponized against Black and Hispanic communities to reinforce racial segregation throughout the city. Using public housing as an anchor rather than a tool of mobility, Chicago’s socioeconomic and political elite effectively colluded to confine Black Chicagoans to closely regulated low-income communities, devaluing land and property in these areas while zoning more desirable land for redevelopment and suburban growth. This artificially raised housing and relocation costs to a level Black Americans could not afford, given both the devaluation of their own communities and generational barriers to wealth accumulation.[3] Chris Rasmussen’s “Creating Segregation in the Era of Integration” applies these narratives to New Brunswick, New Jersey, with particular attention to educational segregation, investigating how city authorities used similar institutional mechanisms (school zoning, the prioritization of white communities and schools for development, and segregationist housing placements) to confine students to segregated schools and resist integration, building on the residential segregation documented by Rothstein and Hirsch.[4]

Alongside these perspectives on segregation as integral to the development of suburban America and subsequent urban decline, historians have also identified disinvestment as a critical economic process in the exacerbation of urban inequality and eventual decay. Beginning in the postwar era, in the aftermath of World War II and the rise of suburban development, industrial cities faced significant losses of manufacturing employment as corporations relocated production overseas and to the suburbs, often following the migration of white suburbanites. Robert Beauregard’s Voices of Decline: The Postwar Fate of U.S. Cities diverges from the perspectives of Hirsch and Rothstein, citing declining employment opportunities and urban disinvestment as the most important factors in the decline of urban America on a national scale. Beauregard argues that by framing the disinvestment of wartime industrial juggernauts such as Newark, Camden, and Detroit as an “inevitability” in the face of rapid deurbanization and suburban growth, policymakers at the national and local levels portrayed urban decline as a natural process rather than a deliberate stripping of employment opportunities and capital from urban communities of color, one that began even before suburbanization occurred on a large scale.[5] Thomas Sugrue’s The Origins of the Urban Crisis: Race and Inequality in Postwar Detroit similarly situates economic devastation in the context of racially exclusive suburban development, tying these multiple perspectives together into a comprehensive narrative of mid-twentieth-century urban decline as cyclical: a recurring pattern of unemployment, abject poverty, and lack of opportunity, reinforced by public policies and social programs that, in theory, were supposed to alleviate such burdens.[6]

Ultimately, while these sources focus on differing aspects of urban decline, together they offer a comprehensive portrait of the causes of urban decay in postwar America. From deindustrialization to segregation and its influence on educational disparities, they provide essential context for an in-depth case study of Camden, New Jersey, both of the city itself and of its public education system. While not all of these sources discuss Camden specifically, the themes and trends they identify ring true and feature prominently in Camden’s story throughout this period.

This paper, however, diverges significantly from this existing literature by positioning the failure of public education in Camden as a key factor in the city’s decline rather than a mere byproduct. A common trend in much of the scholarship discussed above is that educational failure, when discussed at all, is treated not as a contributing root of urban decline but as a visible, tangible marker of decay. While this paper does not deny that educational failure is rooted in fundamental inequities in urban space and broader social failings, it seeks to position Camden’s failing school system as not only a result of urban decline but also a contributor to it, specifically by examining how educational failure shaped narratives of Camden as a failed urban community, beyond help and destined for ruin. In doing so, this paper advances a distinct argument: that Camden’s educational collapse must be understood not merely as evidence of urban decline, but as a foundational force that actively shaped, and in many ways intensified, the narrative of Camden as a city fated for failure.

Suburban Exodus and the Collapse of Urban Schooling

Before exploring the collapse of Camden’s public schools and its influence on the city’s reputation, it is important to establish the context of these shortcomings. Because this paper focuses specifically on the institutional failure of Camden’s public school system and how that failure shaped perceptions of the city as an urban lost cause, this section concentrates primarily on rising racial segregation in the mid-twentieth century, both within city limits and beyond, particularly across Camden County’s sprawling network of suburban communities. While deindustrialization, economic failure, and governmental neglect certainly contributed to an urban environment hostile to educational success, racial segregation was chiefly responsible for the extreme disparities in educational outcomes throughout the greater Camden region, and it is most relevant to this paper’s discussion of the racialized narratives of inevitable urban failure that became so pervasive nationally regarding Camden, both in the mid-to-late twentieth century and into the present day.

These trends date back to one of the most significant demographic transitions of the pre–World War II era: the Great Migration, the mass movement of Black Americans from the South to northern industrial cities. Drawn by the promise of stable employment and the prospect of greater freedom and equality than was available in the Jim Crow South, millions of migrants relocated to northern urban centers, including many along the Northeastern seaboard. Camden, New Jersey, was among these destinations, attracting a growing Black population throughout the early twentieth century due to its concentration of manufacturing giants such as RCA Victor, the New York Shipbuilding Corporation, and Campbell’s Soup.[7] With the outbreak of war in Europe in 1939, and especially following the United States’ entry into World War II after the attack on Pearl Harbor, industrial production in Camden surged. The city soon emerged as a vital hub of wartime manufacturing, cementing its status as a key center of American industrial might.

As a direct result of its industrial growth and expanding wartime economy, Camden continued to attract both Black Americans and new immigrant and migrant populations, many of them of Latino descent. Among these groups were large numbers of stateside Puerto Ricans, continuing a pattern of migration dating back to the 1917 extension of U.S. citizenship to Puerto Ricans.[8] Motivated by many of the same factors as Black migrants, chiefly the pursuit of steady employment and improved living conditions, these communities helped shape Camden into a diverse and vibrant urban center. The city’s population of color expanded rapidly during this period, driven by wartime prosperity and the allure of industrial opportunity.

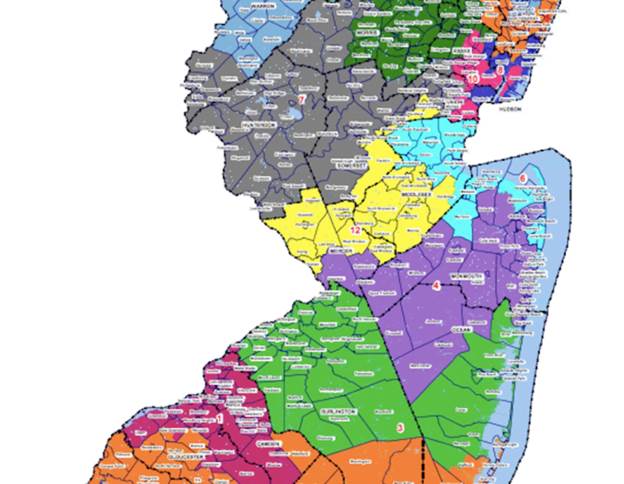

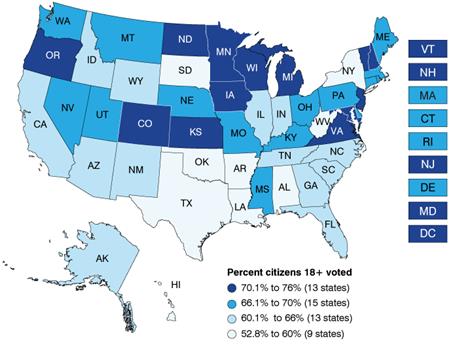

Following American victory in the Pacific and the end of World War II, Camden continued to experience rapid economic growth, although tensions arose among the city’s residents along racial and ethnic lines. With the common enemies of Japan and Nazi Germany defeated, hostilities turned inward, and racial tensions escalated sharply, especially at the dawn of the Civil Rights Movement. As historian Chris Rasmussen writes in “Creating Segregation in the Era of Integration: School Consolidation and Local Control in New Brunswick, New Jersey, 1965-1976,” “While Brown and the ensuing civil rights movement pointed toward racial integration, suburbanization forestalled racial equality by creating and reinforcing de facto segregation. As many whites moved to the suburbs, blacks and Latinos remained concentrated in New Jersey’s cities.”[9] Thus, as Black Americans won victories against racial injustice and secured more rights and legal protections, many city-dwelling white Americans grew fearful and resentful, spurring a mass exodus from urban population centers, including Camden. Drawn by federally backed mortgages, the expansion of highways, and racially exclusive housing policies,[10] white residents moved to neighboring suburbs such as Cherry Hill, Haddonfield, and Pennsauken, while structural barriers effectively excluded Black and Latino residents from the same opportunities. Leaving for the suburbs in droves, white residents took significant wealth and capital, as well as major businesses, with them, weakening the city’s financial base and leaving workers, particularly people of color, vulnerable to unemployment.[11]

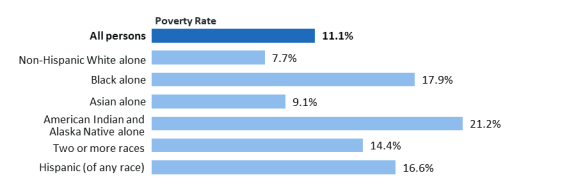

Public and private institutions increasingly withdrew resources from neighborhoods perceived as declining or racially changing. Banks engaged in redlining, denying mortgages and loans to residents of nonwhite neighborhoods, while city budgets prioritized the needs of more affluent suburban constituencies over struggling urban areas.[12] Businesses and developers often chose to invest in suburban communities where white families were relocating rather than in Camden itself, creating a feedback loop of declining property values, eroding tax revenue, and worsening public services. As historian Robert Beauregard writes in Voices of Decline: The Postwar Fate of U.S. Cities, “…while white middle-class and young working-class households had resettled in suburban areas, elderly and minority and other low-income households remained in the central cities. This increased the demand for basic public services (e.g. education) while leaving city governments with taxpayers having lower earnings and less property to tax.”[13] Camden residents left behind within the city thus became increasingly dependent on social welfare programs, which local and state governments funded less and less. This combination of economic retrenchment, racialized perceptions of neighborhood “desirability,” and policy-driven neglect fueled a cycle of disinvestment that disproportionately affected communities of color and left the city structurally disadvantaged.[14]

Concerns about racial integration in neighborhoods and schools also motivated many white families to leave in search of communities aligned with their social and economic preferences. This demographic change was rapid: by 1950, approximately 23.8 percent of Camden City’s population was nonwhite.[15] While that figure may not seem extreme to a modern reader accustomed to diverse communities, it is striking when placed beside Camden’s surrounding suburbs: in 1950, the nonwhite population was a mere 4.5 percent in Pennsauken, 2.1 percent in Haddonfield, and an even lower 1.9 percent in Cherry Hill.[16] These figures also illustrate the cyclical nature of educational segregation in New Jersey, situating twentieth-century segregation not as a unique occurrence but as a continuation of historical patterns. In the nineteenth century, the majority of the state’s schools were segregated along racial lines, and in 1863, New Jersey’s state government directly sanctioned the segregation of public school districts statewide. Although this policy was reversed in 1881, active opposition to integration persisted into the twentieth century, particularly in elementary and middle schools. A 1954 study, for example, found that New Jersey’s schools, both historically and at the time, “…had more in common with states below than above…” the Mason-Dixon line. Most notably, by 1940 the state had more segregated schools than at any point prior to the passage of explicit anti-segregation legislation in 1881.[17] The condition of Camden’s schools in the mid-twentieth century was therefore not an isolated incident but indicative of a recurring pattern of racial separation and disenfranchisement in New Jersey’s schools.

These demographic and economic shifts had profound implications for Camden’s schools, which now served largely Black and Latino student populations. The work of notable civil rights lawyer Albert P. Blaustein proves particularly valuable in demonstrating the catastrophic impact of white flight on Camden’s schools and the irreversible harm inflicted on students of color by institutional failures in education. In a 1963 report for the U.S. Commission on Civil Rights, prepared during the administration of President John F. Kennedy, a cautious supporter of the Civil Rights Movement, Blaustein paints a clear portrait of the declining state of Camden’s public school system and of the everyday issues facing students and educators in the classroom. Delivering a scathing assessment of neighborhood segregation within the city of Camden, supported by data on the race and ethnicity of students enrolled in public schools across the Camden metropolitan area, Blaustein writes:

Northeast of Cooper River is the area known as East Camden, an area with a very small Negro population. For the river has served as a barrier against intracity population…Two of the four junior high schools are located here: Davis, which is 4.0 percent Negro and Veterans Memorial which is 0.2 percent Negro. Also located in East Camden are six elementary schools, four of which are all-white and the other two of which have Negro percentages of 1.3 percent and 19.7 percent…Central Camden, on the other hand, is largely Negro. Thus, the high percentage of Negroes in Powell (100.0 percent), Sumner (99.8 percent), Fetters (91.6 percent), Liberty (91.2 percent), and Whittier (99.1 percent), etc.[18]

Blaustein’s data make it impossible to deny that racial segregation occurred in Camden. They also make clear that, while much discussion of Camden’s public schools and the city’s broader demographic changes focuses on the movement of white residents to the suburbs, racial segregation and stratification also occurred within the city itself, further worsening educational opportunities and learning outcomes for Camden’s students of color.

Blaustein does not end his discussion with the segregation of student bodies, however, but extends his research to the racial and ethnic composition of school personnel, including teachers, administrators, and school board members, with similar results. According to his work, for example, the Fetters School, whose student body was 91.6 percent Black, employed nine white teachers and nine Black teachers in 1960, but two white teachers and sixteen Black teachers in 1963. Even more strikingly, Central School, whose student body was 72.9 percent Black, employed only white teachers in 1955; by 1963, just eight years later, this pattern had completely reversed, and the school employed only Black educators.[19] Blaustein’s investigation of the racial composition of Camden’s public schools thus reveals that segregation was not limited to student enrollment or exclusionary zoning practices but was an insidious demographic trend that pervaded all areas of life in Camden, both inside and outside the classroom. By ensuring that Black students were taught by Black teachers and white students by white teachers, Camden’s schools eliminated opportunities for cross-racial understanding and exposure to alternative perspectives, keeping Black and white communities separate not only in residence and education but also in interaction and socialization.

Manifestations of Failure in Camden Public Schools

With the existence of racial segregation both within Camden and in its surrounding area clearly established, we can now turn to inequalities in public education within the city. Perhaps the most visible marker of these inequalities lay in school facilities and buildings. The physical conditions in which children of color were schooled were grossly outdated, especially in comparison to the facilities provided to white children both inside and outside the city. As of 1963, for example, six public schools, many of them located in segregated communities, had been cited by Camden’s local legislative board as in dire need of replacement or renovation: Liberty School (built 1856; 91.2% Black student population), Cooper School (1874; 30.7% Black), Fetters School (1875; 91.6% Black), Central School (1877; 72.9% Black), Read School (1887; 32.0% Black), and Bergen School (1891; 45.6% Black).[20] Exactly half of the buildings the city deemed unfit for use and unconducive to education (Liberty, Fetters, and Central) served majority-Black student populations, while Bergen School was split just short of evenly between Black and white low-income students.

While these figures account only for the worst of Camden’s schools, inadequate buildings and facilities were common throughout the city, tracking the general quality of infrastructure and housing in the neighborhoods where schools were located. In other words, although the data above reference only a small sample of Camden’s schools, the pattern they reflect (specifically, the intentional zoning of Black students into old, run-down facilities) serves as a microcosm of Camden’s public school system as a whole.

Educator and author Jonathan Kozol expands on the condition of school facilities in Camden’s disenfranchised communities in his widely influential 1991 book, Savage Inequalities. Kozol’s work continues Blaustein’s discussion of the failing infrastructure of public education in Camden, providing an updated portrait of the classrooms serving the city’s poorest communities. Kozol pulls no punches in a visceral account of his visit to Pyne Point Middle School, writing:

…inside, in battered, broken-down, crowded rooms, teem the youth of Camden, with dysfunctional fire alarms, outmoded books and equipment, no sports supplies, demoralized teachers, and the everpresent worry that a child is going to enter the school building armed.[21]

Ultimately, the physical quality of Camden’s public schools was profoundly unequal, mirroring broader residential patterns. Just as poor, majority-minority neighborhoods suffered declining property values and dilapidated housing as a direct result of redlining and other racist housing policies, children of color in Camden were zoned into old, crumbling school buildings that by this time barely remained standing, effectively stripping them of the educational resources and physical comforts provided to white students both in the city and in its neighboring suburbs.

Such inequalities were also present in student achievement and morale. Educated in barely standing buildings overseen by a cash-strapped school district, students of color in Camden’s poor communities were not afforded nearly the same learning opportunities or educational resources as white students in the area. In Camden and Environs, Blaustein cites Camden superintendent Dr. Anthony R. Catrambone’s perspective on educational inequality: “…pupils from Sumner Elementary School (99.8 percent Negro) who transfer to Bonsall Elementary School (50.3 percent Negro) ‘feel unwanted, and that they are having educational problems not experienced by the Negroes who have all their elementary training at Bonsall’ [Catrambone’s words].”[22]

Thus, inequalities in schooling facilities and instruction not only produced a considerable achievement gap between students in segregated and integrated schools but were also clear and demonstrable to the students themselves, even at the elementary level. Catrambone’s observation that students from Sumner felt “unwanted” and perceived themselves as struggling suggests that children in Camden’s segregated neighborhoods internalized the city’s structural inequality, viewing themselves as lesser than their white and integrated peers in both intellectual capacity and personal character. Reinforced by the constant presence of systemic racial discrimination, crumbling school facilities, and deteriorating housing, such perceptions became deeply entrenched in the minds of Camden’s youth, creating a cycle of educational failure sustained externally by social structures and institutions and internally within segregated communities of color.

Dysfunction soon became synonymous with segregated schools and low-income communities of color at the institutional level as well. School administrators and boards of education came to expect failure from students of color, stripping such schools of any opportunity to prove otherwise. Camden’s school leadership, for instance, often reserved rigorous curricula and college-preparatory courses for majority-white schools, declining to extend the same opportunities to majority-minority schools. Reporting on administrative discussions of the potential integration of Camden High School in 1963, Blaustein observes:

The maintenance of comprehensive academic tracks was recognized by administration as dependent on white students, implying students of color alone were not expected to sustain them: ‘if these pupils [white college preparatory students from the Cramer area] were transferred to Woodrow Wilson [a majority-Black high school located in the Stockton neighborhood], Camden High would be almost entirely a school for business instruction and training in industrial arts.’[23]

It is worth first clarifying Blaustein’s use of the terms “business instruction” and “industrial arts,” which refer primarily to what is now called “vocational education.” With this context established, it becomes evident that public educators in early-1960s Camden viewed college education as a racially exclusive opportunity, to be extended only to white students.

These attitudes were reflected in the curricula of Camden’s majority-minority schools, which were, to say the least, held to an extremely low standard. Lessons designed for children of color were simplistic, as schools were treated less as institutions of learning and self-improvement than as detention centers for the city’s disenfranchised youth. As Camden native and historian David Bain writes in “Camden Bound,” “History surrounds the children of Camden, but they do learn a lot of it in school…Whitman is not read by students in the basic skills curriculum. Few students that I met in Camden High, indeed, had never heard of him.”[24] Black and Hispanic students were thus set up for failure relative to white students, viewed as destined either to drop out of high school or to enter lower-paying vocational careers rather than pursue higher education and the opportunities college afforded, particularly in a period when college degrees were significantly rarer and more highly valued than they are today.

Thus, it is evident that throughout the mid-twentieth century, Camden’s public school system routinely failed Black and Hispanic students. Through inequalities in facilities and curriculum, the system repeatedly communicated to students in segregated areas that they were not worth the time and resources afforded to white students and did not possess the same intellectual capacity as suburban children. Denying these children quality schools and viewing them as predestined high school dropouts, Camden’s public schools never truly invested in them, creating an atmosphere of perpetual administrative negligence toward improving schools and learning outcomes for the city’s disadvantaged youth. As Blaustein aptly writes, “‘…the school authorities are against changing the status quo. They want to avoid headaches. They act only when pressures are applied.’”[25]

The Narrative of Inevitability: Camden as a “Lost Cause”

It is clear that such drastic disparities in learning outcomes arose not only out of administrative negligence but also as a direct result of segregation within the city. Although no law affirming segregation was ever passed in New Jersey, schools in Camden were thoroughly segregated, and a clear hierarchy determined which schools and student populations were supported and prepared for success. Time and again, educators favored white students and white schools, leaving students of color and their school communities behind. It is against this backdrop of negligence and resignation that wider narratives of Camden and its youth as “lost causes,” beyond all help, began to emerge.

By the late twentieth century (specifically the 1980s and 1990s), narratives of Camden as a drug- and crime-infested urban wasteland had begun to propagate, reaching a national scale amid increasing gang activity and rapidly rising crime rates. While public attention centered on the city’s criminal justice system and woefully inept political system, reporting on Camden’s public schools reinforced perceptions of the city as destined for failure and beyond saving, chiefly through the local press’s demonization of Camden’s youth. For example, the Courier-Post article “Battle being waged to keep youths from crime” reads, “‘Girls are being raped in schools, drugs are proliferating, alcohol is proliferating, and instead of dealing with it, some parents and administrators are in denial…they insist it’s not happening in their backyard.’”[26] The manner in which the article speaks of public schooling in Camden suggests that the city’s schools were not learning communities but prisons, and that the students inhabiting them were not children but prisoners, each destined to be nothing more than a “thug.”

Articles such as this ignore the city’s long history of racial segregation and redlining, which, as established earlier in this paper, produced not only disparities in learning outcomes but also a deep internalization of institutional failure among many students of color and their communities. Rather than explore the roots of crime and poverty in Camden, such coverage focused on the results of decades of institutional neglect of communities of color. In doing so, media coverage removed the burden of responsibility from the lawmakers and school administrators responsible for the city’s abject poverty and educational disparities, placing the onus instead on the communities that had been intentionally and perpetually disenfranchised across every aspect of Camden’s sociopolitical network.

Additionally, the article’s veiled characterization of Camden parents as uninterested and uninvested in their children’s success is especially egregious and inaccurate. In fact, parents and local communities in even the most impoverished and crime-ridden neighborhoods of Camden had long lobbied for improvements to public schooling and to their communities, concerned chiefly with their children’s futures and opportunities. By the late 1990s, for example, Camden’s charter network had grown significantly, much of its early success owed directly to parents and grassroots organizations devoted to improving the post-schooling opportunities of disadvantaged children. In 1997, over seventeen new charters were approved by the city of Camden, the first school opening in September of that year. The LEAP Academy University Charter School was the result of years of political lobbying and relentless advocacy, in which the loudest voices were those of parents and community activist groups. Spearheaded by Rutgers University-Camden professor and city native Gloria Bonilla-Santiago, the LEAP Academy established parent action committees and community outreach boards and sponsored numerous community service events.[27] Grouping parents, one of the few groups truly invested in the success of Camden’s children of color, with the administrators who repeatedly conspired to confine those children to crumbling schools and prepare them only for low-paying occupations is therefore wildly inaccurate and, in historical context, offensive. It demonstrates how media narratives around Camden and its school system repeatedly disregarded accurate reporting in favor of sensationalized accounts of the city’s struggles, framing its schools and youth as the ground zero and progenitors of the wider issues facing the city as a whole.

While community activism was very much present across Camden, it is also important to highlight the damaging impact that such negative narratives had on the city’s residents. In his essay “Camden Bound,” a literary exploration of the history of Camden and its community, Camden-born historian David Haward Bain describes his own internalization of damaging, sensationalized descriptions of the city. He writes:

For most of my life, my birthplace, the city of Camden, has been a point of irony, worth a wince and often hasty explanation that though I was born in Camden, we didn’t actually ever live in Camden, but in a succession of pleasant South Jersey suburban towns…As I moved through life…I would write out the name Camden (I’m ashamed to name my shame now) with a shudder.[28]

Although “Camden Bound” relates in part to Bain’s individual experience of, and struggle to acknowledge, his birthplace in the wake of its national infamy, he devotes perhaps even more attention to the current state of the city and to the perspectives of its current residents. Recounting his most recent visit to Camden, Bain describes nothing short of absolute devastation, social blight, and urban decay, writing:

Too many newspaper headlines crowd my brain – “Camden Hopes for Release From Its Pain”; “In Struggles of the City, Children Are Casualties”; “Camden Forces Its Suburbs To Ask, What If a City Dies?”; “A Once Vital, Cohesive Community is Slowly, but Not Inevitably, Dying.” And that devastating question from Time: “Who Could Live Here?”…It has been called the poorest city in New Jersey, and some have wondered if it is the poorest in the nation. Adult men and women stand or sit in front of their shabby two-story brick houses, stunned by purposelessness. In abandoned buildings, drug dealers and their customers congregate. On littered sidewalks, children negotiate through broken glass, condoms, and spent hypodermics.[29]

Bain’s description of the sights he witnessed while driving through Camden suggests that its residents had been worn down by the widely circulating narratives of the city’s national infamy. With the vast majority of residents poverty-stricken and lacking the financial or social capital to create meaningful change themselves, such headlines were nothing short of devastating. These portrayals signaled yet another instance of perpetual negligence and resignation on the part of powerful voices in the media, local politics, and even the national government; they reflected a national perception of Camden as “failed,” a perception that Camden’s residents in turn internalized.

For example, in his interview with Rene Huggins, a community activist and director of the Camden Cultural Center, Bain relays her frustration with state policy after Republican governor Christine Todd Whitman took office, including rollbacks of welfare programs, occupational training, and educational funding that had been promised to the city. Speaking on the increasing hopelessness of many city residents, Huggins states, “And on top of all that…we get that headline in Time magazine – ‘Who Could Live Here?’ Why not just give us a lot of shovels and bury the place?”[30] Such statements, alongside Bain’s own observations, suggest that national resignation to Camden’s condition, an unwillingness to take any initiative to improve the city, and, even more damaging, the removal of resources and social programs designed specifically to improve it, led many residents to adopt a similar mentality of resignation and shame toward their community. Having been spurned and failed by powerful sociopolitical institutions across generations, many chose simply to endure the city’s misery rather than attempt real, meaningful change, a response that in turn reinforced the harmful narratives that had done so much to produce it.

The very Time article that Rene Huggins mentions in anger, Kevin Fedarko’s “Who Could Live Here?”, also offers insight into public perceptions of Camden, and more specifically its youth, during the late twentieth century. In this 1992 piece, Fedarko portrays the city of Camden as a barren wasteland and its inhabitants, predominantly young people and children, as nothing more than prisoners and criminals. For example, Fedarko writes:

The story of Camden is the story of boys who blind stray dogs after school, who come to Sunday Mass looking for cookies because they are hungry, who arm themselves with guns, knives and — this winter’s fad at $400 each — hand grenades. It is the story of girls who dream of becoming hairdressers but wind up as whores, who get pregnant at 14 only to bury their infants.[31]

Fedarko’s description of Camden’s children is extraordinarily problematic in that it not only treats the city’s youth as a monolithic group but then proceeds to demonize them en masse. By describing the city’s young people as wantonly sadistic and violent, without situating rising youth crime rates in the context of historical disenfranchisement or pausing to acknowledge that his description does not apply to all of the city’s young people, Fedarko furthers narratives of Camden and its youth as lawless and destined for jail cells rather than degrees. His description of Camden’s young women as “whores” is especially egregious given that the people he describes are children; the label attaches a derogatory term to girls, largely girls of color, while failing to acknowledge the true tragedy of Camden and the conditions to which its young people were subjected. In describing a teenager involved in the drug trade, Fedarko employs similarly disrespectful and dehumanizing language, writing:

…drug posses …use children to keep an eye out for vice-squad police and to ferry drugs across town. Says “Minute Mouse,” a 15-year-old dealer: “I love my boys more than my own family.” Little wonder. With a father in jail and a mother who abandoned him, the Mouse survived for a time by eating trash and dog food before turning to the drug business.[32]

Ultimately, it is evident that during the late twentieth century, specifically the 1980s and 1990s, narratives surrounding Camden portrayed the city as nothing more than an urban wasteland and lost cause, a sad excuse for urban existence that obscured its history as a sprawling manufacturing juggernaut. More damaging, however, were narratives surrounding the people of Camden (especially its youth), who became synonymous with violence and criminal activity rather than opportunity or potential. In short, media coverage of Camden was concerned chiefly with the image of an urban space and people in chaos; it thus prioritized the spectacle of Camden’s failures over the city’s historical tragedy, neglecting to situate the former in the context of the de facto segregation and racialized disenfranchisement that the city had imposed on its own residents.

Conclusion

Ultimately, it cannot be denied that perceptions of Camden’s public education system as failing and its youth as morally debased were essential to the formulation of “lost cause” narratives about the city. In the popular imagination, Camden became synonymous with decay and dysfunction: a city transformed from a thriving industrial hub into what national headlines would later call “Murder City, U.S.A.” In truth, however, these narratives of inevitability emerged from the city’s long history of racial segregation, economic turmoil, and administrative neglect of education. Camden’s schools were central to this development, acting as both products and producers of inequity and serving as clear symbols of failed public policy, failures later recast as the moral shortcomings of the disenfranchised communities themselves.

As demonstrated throughout this study, the structural roots of Camden’s failures in public education lay in segregation, manufactured by the same redlining maps and exclusionary residency policies that confined families of color to the city’s most desolate neighborhoods and thereby determined the boundaries of their children’s schools. White flight and suburban migration drained Camden of its capital and tax base, concentrating those resources instead in suburban communities whose existing affluence was further reinforced by federal mortgage programs and social support. Historical scholarship on urban decline in the postwar period has long emphasized the importance of understanding urban segregation not as a natural social phenomenon but as an architectural inequity extending into every aspect of civic life and education. Camden’s experience confirms this: segregation functioned not only as a physical division of space but also as a moral and ideological one, creating the conditions for policymakers and the media to portray the city’s public schools as evidence of cultural pathology rather than systemic betrayal.

By the late twentieth century, these narratives had become fatalistic. Newspaper headlines depicted Camden’s classrooms as sites of chaos and its youth as violent, transforming real inequities into spectacle. The children who bore the weight of these conditions, students of color educated in crumbling buildings and underfunded programs, were cast as perpetrators of their city’s demise rather than its victims. The label “Murder City, U.S.A.” distilled these complexities into a single, dehumanizing phrase, erasing the structural roots of decline in favor of a narrative that made Camden’s suffering appear inevitable. In doing so, public discourse not only misrepresented the city’s reality but also justified further disinvestment, as policymakers treated Camden’s collapse as a moral failure rather than a product of policy.

However, despite such immense challenges and the damaging narratives so deeply entrenched in the American national psyche, Camden and its inhabitants persisted. Refusing to give up on their communities, Camden’s residents, many of whom lacked the influence and capital to create change alone, banded together to weather the storm of national infamy. From community activism to political lobbying, Camden’s communities of color demonstrated consistent self-advocacy. Viewing outside aid as perpetually promised yet never delivered, they pooled their resources and invested in their own communities and children, establishing extensive charter networks and advocating for criminal justice reform and community policing.

Although change was slow and at times seemed unattainable, Camden has experienced a significant resurgence over the past decade or so. Aided by investment from major corporations and sports organizations (for example, the Philadelphia 76ers’ relocation of their practice facilities and front offices to the Camden Waterfront in 2016) and by a revitalization of educational access, including the recruitment of teaching professionals by the Camden Education Fund, the city has slowly begun to reverse trends of decay and decline, pushing back against narratives that had deemed its failure inevitable and inescapable. Camden celebrated its first homicide-free summer this year; its story is tragic, yet far from over. Rather than accept a story of persistent institutional failure and disenfranchisement, Camden’s residents have taken charge of the narrative of their home and communities, recasting it as one of perseverance, determination, and strength. In defiance of decades of segregation, disinvestment, and stigma, Camden stands not as America’s “Murder City” but as its mirror: a testament to how injustice is built and how, through resilience, effort, and advocacy, it can be torn down.

References

“The case for charter schools.” Courier-Post, March 2, 1997.

Bain, David Haward. “Camden Bound.” Prairie Schooner 72, no. 3 (1998): 104–44. http://www.jstor.org/stable/40637098.

Beauregard, Robert A. Voices of Decline: The Postwar Fate of U.S. Cities. 2nd ed. New York: Routledge, 2003. http://www.123library.org/book_details/?id=112493.

Blaustein, Albert P., and United States Commission on Civil Rights. Civil Rights U.S.A.: Public Schools: Cities in the North and West, 1963: Camden and Environs. Washington, DC: United States Commission on Civil Rights, 1964.

Douglas, Davison M. “The Limits of Law in Accomplishing Racial Change: School Segregation in the Pre-Brown North.” UCLA Law Review 44, no. 3 (1997): 677–744.

Fedarko, Kevin. “The Other America.” Time, January 20, 1992. https://content.time.com/time/subscriber/article/0,33009,974708-3,00.html.

Gillette, Howard. Camden after the Fall: Decline and Renewal in a Post-Industrial City. Philadelphia: University of Pennsylvania Press, 2005.

Goheen, Peter G., and Arnold R. Hirsch. “Making the Second Ghetto: Race and Housing in Chicago, 1940-1960.” Labour / Le Travail 15 (1985): 234. https://doi.org/10.2307/25140590.

Kozol, Jonathan. Savage Inequalities: Children in America’s Schools. New York: Broadway Books, 1991.

Rasmussen, Chris. “Creating Segregation in the Era of Integration: School Consolidation and Local Control in New Brunswick, New Jersey, 1965–1976.” History of Education Quarterly 57, no. 4 (2017): 480–514. https://www.jstor.org/stable/26846389.

Rothstein, Richard. The Color of Law: A Forgotten History of How Our Government Segregated America. First edition. New York: Liveright Publishing Corporation, a division of W.W. Norton & Company, 2017.

Sugrue, Thomas J. The Origins of the Urban Crisis: Race and Inequality in Postwar Detroit. Princeton, NJ: Princeton University Press, 1996.

Tantillo, Sara. “Battle being waged to keep youths from crime.” Courier-Post, June 8, 1998.

Yaffe, Deborah. Other People’s Children: The Battle for Justice and Equality in New Jersey’s Schools. New Brunswick, NJ: Rivergate Books, 2007. https://search.ebscohost.com/login.aspx?direct=true&scope=site&db=nlebk&db=nlabk&AN=225406

[1] Peter G. Goheen and Arnold R. Hirsch, “Making the Second Ghetto: Race and Housing in Chicago, 1940–1960,” Labour / Le Travail 15 (1985): 234.

[2] Richard Rothstein, The Color of Law: A Forgotten History of How Our Government Segregated America (New York: Liveright, 2017).

[3] Goheen and Hirsch, “Making the Second Ghetto,” 234.

[4] Chris Rasmussen, “Creating Segregation in the Era of Integration: School Consolidation and Local Control in New Brunswick, New Jersey, 1965–1976,” History of Education Quarterly 57, no. 4 (2017): 480–514.

[5] Robert A. Beauregard, Voices of Decline: The Postwar Fate of U.S. Cities, 2nd ed. (New York: Routledge, 2003).

[6] Thomas J. Sugrue, The Origins of the Urban Crisis: Race and Inequality in Postwar Detroit (Princeton, NJ: Princeton University Press, 1996).

[7] Howard Gillette, Camden after the Fall: Decline and Renewal in a Post-Industrial City (Philadelphia: University of Pennsylvania Press, 2005), 12–15.

[8] David Haward Bain, “Camden Bound,” Prairie Schooner 72, no. 3 (1998): 104–44.

[9] Rasmussen, “Creating Segregation in the Era of Integration,” 487.

[10] Richard Rothstein, The Color of Law: A Forgotten History of How Our Government Segregated America (New York: Liveright, 2017), 70–75; Gillette, Camden after the Fall, 52–54.

[11] Gillette, Camden after the Fall, 45–50; Bain, “Camden Bound,” 110–12.

[12] Thomas J. Sugrue, The Origins of the Urban Crisis: Race and Inequality in Postwar Detroit (Princeton, NJ: Princeton University Press, 1996), 35–40.

[13] Beauregard, Voices of Decline, 91.

[14] Gillette, Camden after the Fall, 50–55; Bain, “Camden Bound,” 120.

[15] Albert P. Blaustein, Civil Rights U.S.A.: Camden and Environs, report to the U.S. Civil Rights Commission, 1963, 22.

[16] Blaustein, Civil Rights U.S.A., 23–24.

[17] Davison M. Douglas, “The Limits of Law in Accomplishing Racial Change: School Segregation in the Pre-Brown North,” UCLA Law Review 44, no. 3 (1997): 677–744.

[18] Blaustein, Civil Rights U.S.A., 18.

[19] Blaustein, Civil Rights U.S.A., 18.

[20] Blaustein, Civil Rights U.S.A.,

[21] Jonathan Kozol, Savage Inequalities: Children in America’s Schools (New York: Broadway Books, 1991).

[22] Blaustein, Civil Rights U.S.A., 22.

[23] Blaustein, Civil Rights U.S.A.,

[24] Bain, “Camden Bound,” 120–21.

[25] Blaustein, Civil Rights U.S.A.,

[26] “Battle being waged to keep youths from crime,” Courier-Post, June 8, 1998.

[27] Sarah Tantillo, “The case for charter schools,” Courier-Post, March 2, 1997.

[28] Bain, “Camden Bound,” 108–9.

[29] Bain, “Camden Bound,” 111.

[30] Bain, “Camden Bound,” 119.

[31] Kevin Fedarko, “The Other America,” Time, January 20, 1992.

[32] Ibid.